What is Technical SEO?

At its core, technical SEO aims to enhance your website’s code and file structure, enabling search engines to effectively crawl, index, and comprehend your site. It also aims to provide users with a good experience, facilitated by straightforward site navigation, well-placed internal links, and pages that load quickly and without issues.

A weak technical SEO foundation can significantly limit the effectiveness of your other SEO initiatives, such as content creation and optimization. If search engines can’t discover, crawl, index and understand your site, all the content in the world will do you no good.

So, what are some typical tasks search engines perform?

- Discover your website

- Determine which pages they are allowed to crawl and index

- Crawl your website to discover new and existing pages to index

- Revisit pages to detect changes since the last crawl

- Identify the authoritative version of a page in cases of duplicate or near-duplicate content

- Determine the appropriate redirect destination for outdated content or broken links

- Understand the overall context and theme of your website

- Understand the relationship between various pages

- Evaluate pages for eligibility for special Search Engine Results Page (SERP) features, also known as rich results

- Evaluate page loading speed and performance

If search engines struggle with these tasks, your website’s performance in search rankings will be adversely affected. Essentially, if they can’t access and understand your content, you won’t stand a chance at competing in the search results.
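
To make the second task in the list above more concrete, here is a minimal sketch of how a crawler might decide whether it is allowed to fetch a page. It uses Python’s standard `urllib.robotparser` module. The robots.txt content and the `is_crawlable` helper are hypothetical and exist only for illustration. Python’s parser also does not replicate every matching rule that a real search engine applies, so treat the result as a rough check. Note that robots.txt governs crawling only; indexing is controlled separately, for example with a noindex tag.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
"""


def is_crawlable(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Return True if the given robots.txt rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() accepts the file's lines, so no network request is needed here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in (
        "https://www.example.com/shirts/red-shirt",
        "https://www.example.com/admin/settings",
    ):
        print(url, is_crawlable(ROBOTS_TXT, url))
```

With these sample rules, the product page is allowed and the admin page is blocked.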

The Role of a Technical SEO Audit

How do you make sure your site is accessible and indexable by search engines? You start with a technical SEO audit, which is key in identifying issues that prevent search engines from effectively crawling and indexing your website.

The audit process typically involves the following steps:

- Initial Website Crawl: This step mirrors the search engine’s approach to website crawling. Using an SEO crawling tool such as Screaming Frog or Sitebulb, you perform a comprehensive crawl of your website. The crawl discovers all your web pages and puts them into a master list, which includes detailed information like status codes, indexability, crawler directives, and more.

- Issue Identification: Once you have a centralized list of your site’s pages and information, you’ll need to identify specific issues. To keep everything organized, you can categorize each issue based on its nature – whether it pertains to robots.txt, sitemap files, or links, for instance. Each issue is placed under a corresponding spreadsheet tab, complete with supporting details that will help with the analysis.

- Issue Analysis: The collected data is generally reliable, but occasionally, crawling tools can flag non-issues. You want to manually verify the impact and context of each issue to avoid working through a list of issues that aren’t problematic. For example, a page blocked from indexing isn’t inherently problematic if it was intentionally blocked. This is something that cannot be verified without a manual review.

- Issue Prioritization: After cleaning the data to include only genuine issues, the next step is to prioritize them. This can be as straightforward as labeling each issue as critical, high, medium, or low priority. Some issues, like URLs appearing in multiple sitemaps, should eventually be addressed but likely won’t have a substantial impact on your results. Prioritization should instead focus on critical issues that prevent search engines from crawling and indexing your pages, such as the presence of a noindex tag on important pages or JavaScript/CSS files being blocked from crawlers in the robots.txt file.

- Implementation: With a clear, prioritized list of issues, you methodically address each one, starting with those affecting crawling and indexing, and progressing to issues that are simply not following SEO best practices.
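
The prioritization and implementation steps above can be sketched in a few lines of Python. This is only an illustration under our own assumptions: the `Issue` class, its field names, and the `implementation_order` helper are hypothetical, and the sample issues are taken from the examples mentioned in the steps.

```python
from dataclasses import dataclass

# Lower rank = handled sooner. The four labels match the ones described above.
PRIORITY_RANK = {"critical": 0, "high": 1, "medium": 2, "low": 3}


@dataclass
class Issue:
    description: str
    priority: str  # one of the PRIORITY_RANK keys
    blocks_crawl_or_index: bool


def implementation_order(issues: list[Issue]) -> list[Issue]:
    """Sort issues so crawl/index blockers come first, then by priority label."""
    return sorted(
        issues,
        # False sorts before True, so blockers (not blocks == False) lead.
        key=lambda i: (not i.blocks_crawl_or_index, PRIORITY_RANK[i.priority]),
    )


if __name__ == "__main__":
    backlog = [
        Issue("URL appears in multiple sitemaps", "low", False),
        Issue("noindex tag on an important page", "critical", True),
        Issue("CSS blocked in robots.txt", "high", True),
    ]
    for issue in implementation_order(backlog):
        print(issue.priority, "-", issue.description)
```

In practice this usually lives in a spreadsheet rather than code, but the ordering logic is the same.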

Our Unique Approach to Technical SEO Audits

Now that you have a basic understanding of the technical audit process, let’s take a look at our unique approach. While numerous technical SEO checklists are available online, most fall short in the range of issues they cover. Additionally, a checklist will do you no good if you don’t have access to crawling tools, don’t know how to use them, or lack the technical knowledge to implement the changes.

Our audit process has been developed in-house, combining our extensive experience with standard industry checks and including critical yet frequently overlooked issues. This approach ensures a thorough and effective audit, surpassing what conventional checklists can offer.

By partnering with us, you’ll also benefit from our industry experience, and enjoy the peace of mind that comes from knowing your SEO needs are in capable hands.

Your Audit Breakdown

Let’s take a look at just some of the issues we look for in our technical audits.

Sitemaps

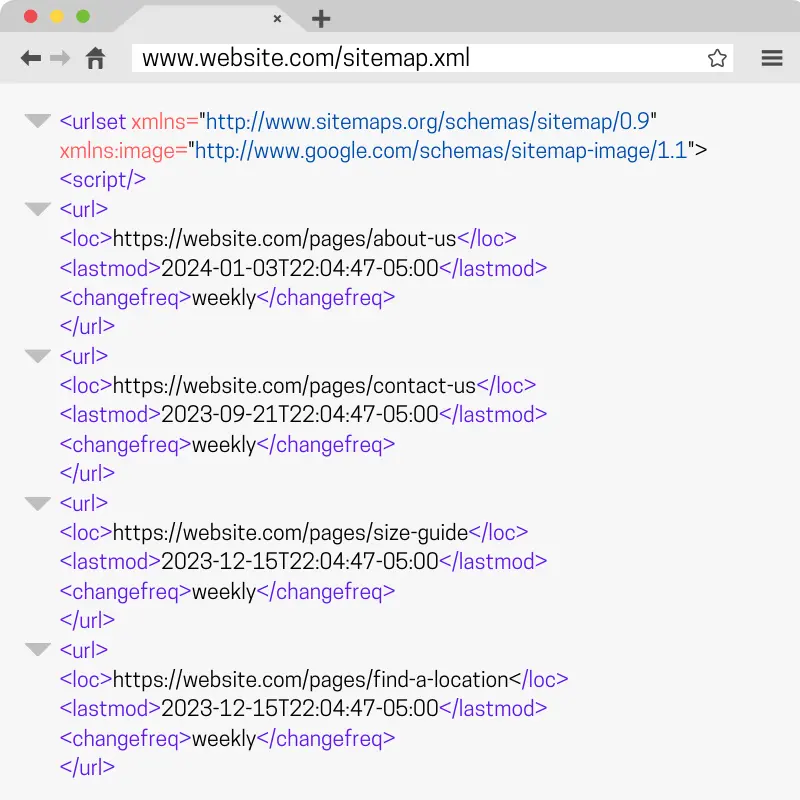

A sitemap is a list of all the important pages you want search engines to index. While search engines can generally discover and index pages through standard crawls, sitemaps play a vital role in providing additional signals that help them in this process.

However, just having a sitemap in place isn’t enough. Sitemaps need to be submitted to search engines, and they come with a set of requirements and best practices that need to be followed. In the worst-case scenario, a sitemap that has not been optimized may even lead search engines to stop crawling it, resulting in less efficient crawling and categorization as well as indexing delays.

Sample Issues to Check

- Is the sitemap correctly formatted and recognized by search engines?

- Do all URLs in the sitemap return a 200 status code, and are the URLs indexable?

- Are there important URLs not included in the sitemap that should be?

- Does the sitemap contain orphan URLs that lack internal links, limiting their visibility and reach?

- Is the sitemap configured to update automatically, reflecting changes on your website?
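
A couple of the checks above lend themselves to a quick script. The sketch below parses an XML sitemap with Python’s standard library and compares it against crawl data. The sample sitemap, the `crawl_status` mapping (URL to status code, as you might export it from a crawling tool), and the helper names are all hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap, for illustration only.
SAMPLE_SITEMAP = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/shirts/red-shirt</loc></url>
  <url><loc>https://www.example.com/old-page</loc></url>
</urlset>
"""


def sitemap_urls(xml_text: str) -> list[str]:
    """Return every <loc> value listed in a URL sitemap."""
    root = ET.fromstring(xml_text.encode("utf-8"))
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


def audit_sitemap(xml_text: str, crawl_status: dict[str, int],
                  important_urls: list[str]) -> dict[str, list[str]]:
    """Flag listed URLs that did not return 200 and important URLs not listed."""
    listed = sitemap_urls(xml_text)
    return {
        "non_200": [u for u in listed if crawl_status.get(u) != 200],
        "missing": sorted(set(important_urls) - set(listed)),
    }
```

A URL that is absent from the crawl data is also reported under `non_200`, since its status is unknown.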

Internal Linking

Internal links allow users and search engines to navigate through your website. When your site’s pages are connected via internal links, search engines can more easily discover and interpret your site’s content, while users can quickly find the information they’re looking for.

That sounds simple enough, but how you choose to internally link is essential to unlocking their full potential.

For example, search engines use anchor text (the visible text in a hyperlink) to understand how different pages are related, and to gather context about the information contained in the linked page, further helping them understand and categorize content across your site. Additionally, internal links contain attributes that, if not properly implemented, can significantly impede your internal linking strategy.

Top it off with links leading to broken pages or multiple links linking to different versions of the same page, and you’ll find that internal links are anything but easy to maintain.

Sample Issues to Check

- Are there important pages located more than 4 clicks away from the homepage?

- Are there any internal links marked as nofollow?

- Are there any broken internal links?

- Are there any pages with no incoming internal links?

- Are there any instances of exact-match anchor text being overused?
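
Click depth and orphan pages are easy to reason about if you picture your site as a graph of pages connected by links. Here is a minimal sketch using a breadth-first search. The `LINKS` mapping (page to the pages it links to) is hypothetical sample data, as are the function names; in a real audit this data would come from your crawl export.

```python
from collections import deque

# Hypothetical internal link graph: page -> pages it links to.
LINKS = {
    "/": ["/shirts", "/blog"],
    "/shirts": ["/shirts/red-shirt"],
    "/blog": [],
    "/shirts/red-shirt": [],
    "/landing-page": ["/shirts"],  # links out, but nothing links to it
}


def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Breadth-first search from the homepage; depth = minimum number of clicks."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links: dict[str, list[str]], home: str = "/") -> list[str]:
    """Pages known to the crawl that receive no internal links."""
    linked_to = {t for targets in links.values() for t in targets}
    return sorted(p for p in links if p not in linked_to and p != home)


def too_deep(links: dict[str, list[str]], max_clicks: int = 4) -> list[str]:
    """Reachable pages that sit more than max_clicks away from the homepage."""
    return sorted(p for p, d in click_depths(links).items() if d > max_clicks)
```

Orphan pages never show up in `click_depths`, because they cannot be reached by following links from the homepage.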

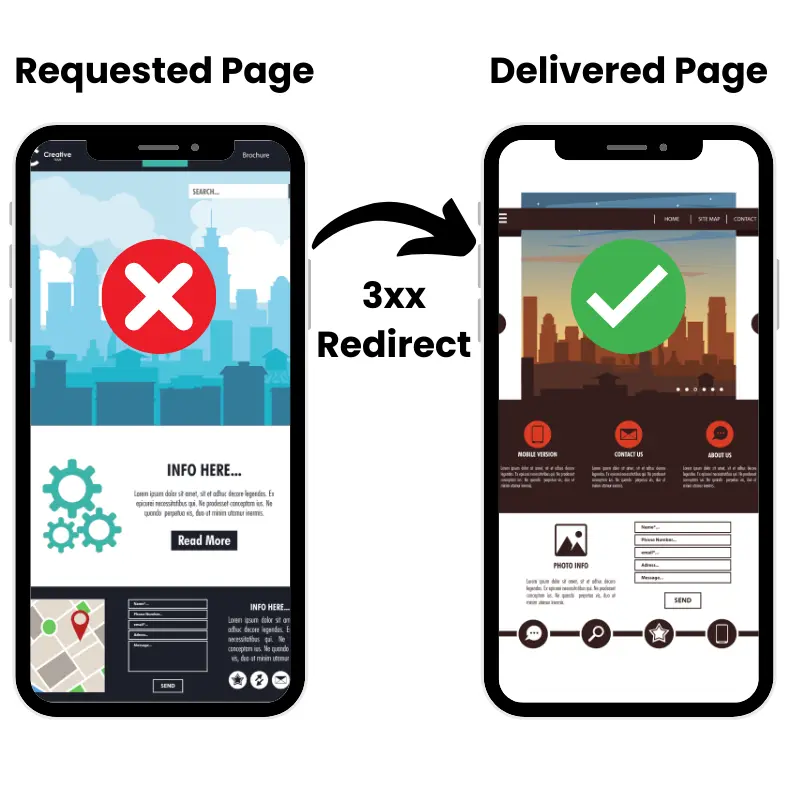

Redirects

Redirects play an essential role in ensuring that users consistently land on the correct page, even when they follow a link to a page that has moved or no longer exists. This functionality is particularly important for external links – those found on other websites outside your control. When you implement redirects effectively, you can preserve and transfer SEO equity to a different page, maintaining your site’s search ranking and visibility.

In the context of internal links – links within your own website – redirects are generally unnecessary. Since you have direct control over your site’s content, you can update any internal links to point directly to the new URL. Regularly auditing internal links so they point to current URLs avoids unnecessary redirects, which can slow down page loading times and hurt the user experience.

Sample Issues to Check

- Are there any internal redirects?

- Are there any redirect chains or loops?

- Are redirects set to the appropriate 3xx status code?

- Are pages redirected to an appropriate replacement page?
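
Redirect chains and loops are simple to detect once you have a map of which URL redirects where. The sketch below assumes a hypothetical `REDIRECTS` mapping (source URL to destination URL) and a helper named `trace_redirect`; neither comes from any specific tool.

```python
# Hypothetical redirect map: source URL -> destination URL.
REDIRECTS = {
    "/old": "/new",  # single redirect
    "/a": "/b",      # chain: /a -> /b -> /c
    "/b": "/c",
    "/x": "/y",      # loop: /x -> /y -> /x
    "/y": "/x",
}


def trace_redirect(redirects: dict[str, str], url: str) -> tuple[list[str], str]:
    """Follow redirects from url and classify the result.

    Returns the path taken and one of: "none", "single", "chain", "loop".
    """
    path = [url]
    seen = {url}
    while url in redirects:
        url = redirects[url]
        if url in seen:
            # We have been here before, so the redirects never resolve.
            return path + [url], "loop"
        path.append(url)
        seen.add(url)
    hops = len(path) - 1
    if hops == 0:
        return path, "none"
    return path, "single" if hops == 1 else "chain"


if __name__ == "__main__":
    for start in ("/old", "/a", "/x", "/new"):
        print(start, trace_redirect(REDIRECTS, start))
```

A chain is best fixed by pointing the first URL straight at the final destination, while a loop needs one of its redirects removed or retargeted.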

Canonicals

Canonical tags signal to search engines which version of a page should be considered authoritative for indexing purposes. This is particularly relevant in scenarios like ecommerce websites, where a product might be listed under multiple categories. For example, consider a red shirt that is part of both a sale category and general shirts category.

In this case, the product might be accessible via two different URLs:

www.example.com/on-sale/red-shirt

www.example.com/shirts/red-shirt

Search engines tend to avoid displaying duplicate content from the same website in search results. Given that both URLs likely contain very similar content, it creates ambiguity about which version should be indexed and shown in search results. This is something that you don’t want to leave up to search engines to decide.

By using a canonical tag, you can designate one of these URLs as the authoritative source. This helps search engines understand which page to index, while still retaining the value of having multiple URLs for navigation and user experience on your site. In addition, this consolidates SEO signals into a single page which can help with ranking.

However, like many aspects of technical SEO, there are nuances to canonical tags. Behind-the-scenes factors, such as conflicting or missing canonicals, can impact the effectiveness of these tags, making it important to implement and monitor them carefully to ensure they function as intended.

Sample Issues to Check

- Are there any pages with missing or multiple canonical tags?

- Are there any canonical tags that point to non-indexable URLs?

- Do paginated pages canonicalize to the appropriate page?
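
A canonical tag is a `<link rel="canonical">` element in a page’s HTML head. As a rough illustration of the first check above, the sketch below scans a page’s HTML for canonical tags with Python’s built-in HTML parser. The class and function names are hypothetical, and the sample markup reuses the red shirt URLs from earlier.

```python
from html.parser import HTMLParser


class CanonicalCollector(HTMLParser):
    """Collect the href of every <link rel="canonical"> tag in a document."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        rel_values = (attr.get("rel") or "").lower().split()
        if "canonical" in rel_values and attr.get("href"):
            self.canonicals.append(attr["href"])


def canonical_status(html: str) -> str:
    """Return "missing", "ok", or "multiple" depending on the tags found."""
    collector = CanonicalCollector()
    collector.feed(html)
    count = len(collector.canonicals)
    if count == 0:
        return "missing"
    return "ok" if count == 1 else "multiple"


if __name__ == "__main__":
    page = (
        "<html><head>"
        '<link rel="canonical" href="https://www.example.com/shirts/red-shirt">'
        "</head><body>Red shirt</body></html>"
    )
    print(canonical_status(page))
```

This only inspects the HTML source. Whether the canonical target is itself indexable would need a second check against your crawl data.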

Schema

Have you ever noticed search results that include more than the standard blue link – such as star ratings, review counts, event dates, or video snippets? These enriched features – also known as rich results – are the result of implementing schema markup on a website.

Schema markup, a type of structured data, plays a pivotal role in enhancing your site’s listing in search engine results. When correctly implemented, it enables your site to display these additional, eye-catching features. This not only makes your listing more visually appealing but can also significantly boost click-through rates (CTR).

Sample Issues to Check

- Is the site currently using schema?

- Does the schema accurately reflect what’s on the page?

- Is the schema using all relevant properties?

- Are there any schema validation issues?
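
To give a sense of what schema markup looks like, here is a minimal sketch that builds a JSON-LD snippet for a product with star ratings. The `Product` and `AggregateRating` types and their properties come from the schema.org vocabulary, while the `product_jsonld` helper and the sample values are hypothetical. Keep in mind that valid markup only makes a page eligible for rich results; search engines decide whether to show them.

```python
import json

SCRIPT_OPEN = '<script type="application/ld+json">'
SCRIPT_CLOSE = "</script>"


def product_jsonld(name: str, rating: float, review_count: int) -> str:
    """Build a JSON-LD <script> tag describing a product and its ratings."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }
    return f"{SCRIPT_OPEN}\n{json.dumps(data, indent=2)}\n{SCRIPT_CLOSE}"


if __name__ == "__main__":
    # Sample values for illustration; real values must match what the page shows.
    print(product_jsonld("Merino Wool Shirt", 4.6, 128))
```

The values in the markup should always mirror what users can actually see on the page, which is what the second check above is about.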

Image Optimization

Let’s face it – no one gets excited about encountering a lengthy, uninterrupted wall of text, no matter how good the content may be. In fact, people may choose to skip your content altogether if it looks like a college essay rather than an informative, engaging article.

One way to increase engagement and encourage reading is to include images, which add visual appeal and break up long stretches of text.

However, incorporating images requires more than just inserting them into your content. You need to consider both their impact on page load times and how search engines interpret them to maximize benefits while mitigating any drawbacks.

For example, images with a large file size can significantly slow down the loading speed of a page. This slowdown can negatively impact user experience, potentially decreasing engagement as visitors may leave the site before the content fully loads. For search engines, properly naming image files and adding descriptive alt text helps them understand what’s in each image and can help you rank in image search.

Sample Issues to Check

- Are images using a modern format, such as WebP?

- Are images smaller than 100 KB?

- Do images contain descriptive alt text?

- Are file names representative of the image content?

- Are images out of the initial viewport lazy loaded?
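
Several of these checks can be roughed out by scanning a page’s `<img>` tags. The sketch below is an illustration under our own assumptions: the `ImageAudit` class is hypothetical, the list of modern formats includes AVIF alongside WebP as our own addition, and file size is left out because it cannot be read from the HTML. The script also cannot tell which images sit in the initial viewport, so the lazy-loading flag is a prompt for manual review, not a verdict.

```python
import posixpath
from html.parser import HTMLParser
from urllib.parse import urlparse

# WebP is named in the checklist above; AVIF is our own assumption.
MODERN_EXTENSIONS = {".webp", ".avif"}


class ImageAudit(HTMLParser):
    """Collect (src, issues) for every <img> tag with at least one issue."""

    def __init__(self) -> None:
        super().__init__()
        self.findings: list[tuple[str, list[str]]] = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr = dict(attrs)
        src = attr.get("src") or ""
        issues = []
        # Purely decorative images may legitimately use empty alt text.
        if not (attr.get("alt") or "").strip():
            issues.append("missing alt text")
        extension = posixpath.splitext(urlparse(src).path)[1].lower()
        if extension not in MODERN_EXTENSIONS:
            issues.append("not a modern format")
        # Images in the initial viewport should NOT be lazy loaded.
        if (attr.get("loading") or "").lower() != "lazy":
            issues.append("not lazy loaded")
        if issues:
            self.findings.append((src, issues))


def audit_images(html: str) -> list[tuple[str, list[str]]]:
    audit = ImageAudit()
    audit.feed(html)
    return audit.findings
```

A file name like `IMG_0042.jpg` would also fail the descriptive file name check, though judging that reliably takes a human eye.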

URL Structure and Optimization

URLs often receive less attention than they deserve when it comes to technical SEO optimization. Typically, a URL is set when a page is first created and then rarely altered, if ever. This is generally a sound practice because altering the URL of an existing page can lead to the creation of a broken link (the old URL), potentially stripping the page of its accrued SEO benefits unless a proper redirect is implemented.

Furthermore, a URL change is perceived by search engines as the creation of a new page, which must then build its SEO value from scratch. This challenge, however, can again be mitigated through implementing proper redirection.

So while we don’t want to change URLs unless absolutely necessary, there are instances where it can be advantageous.

Consider the following example of a poorly structured URL for a merino wool shirt:

www.example.com/product_92773

This URL is ineffective for two key reasons: it fails to describe the product, and it uses an underscore rather than a hyphen, so search engines may not treat the words on either side of it as separate words. In such cases, the benefits of modifying the URL to be more descriptive and search-engine-friendly can outweigh the risks associated with the change.

A more optimized URL could be:

www.example.com/merino-wool-shirt

This URL is clear to both users and search engines, making it both user-friendly and more conducive to SEO, thereby justifying the URL change. Pair it with a redirect from the old URL to the new URL, and we’re all set.

Sample Issues to Check

- Do URLs contain both the target keyword and descriptive keywords?

- Do any URLs overuse keywords?

- Do any URLs contain problematic characters, such as an underscore?

- Have any URLs been changed without a redirect from the old URL to the new one?
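
As a rough sketch of how the URL example above could be checked automatically, the snippet below flags underscores and opaque numeric IDs, and turns a page title into a hyphenated slug. The function names and the three-digit threshold for what counts as an opaque ID are our own assumptions.

```python
import re
from urllib.parse import urlparse


def slugify(title: str) -> str:
    """Lowercase a title and join its words with hyphens."""
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower())
    return slug.strip("-")


def url_issues(url: str) -> list[str]:
    """Return a list of structural issues found in the URL's path."""
    # Add a scheme if it is missing so urlparse can separate host and path.
    if "://" not in url:
        url = "https://" + url
    path = urlparse(url).path
    issues = []
    if "_" in path:
        issues.append("contains underscore")
    if re.search(r"\d{3,}", path):  # assumption: 3+ digits is an opaque ID
        issues.append("contains opaque numeric ID")
    if path != path.lower():
        issues.append("contains uppercase characters")
    return issues


if __name__ == "__main__":
    print(url_issues("www.example.com/product_92773"))
    print(url_issues("www.example.com/" + slugify("Merino Wool Shirt")))
```

The first URL is flagged for both the underscore and the numeric ID, while the slug-based URL passes cleanly. Remember that any URL you change still needs a redirect from the old address.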

Mobile Optimization

Despite Google’s announcement of the mobile-first indexing initiative several years ago, many websites continue to prioritize desktop-focused optimization. This approach overlooks a critical shift in user behavior and search engine algorithms.

Mobile-first indexing means that a search engine like Google primarily uses the mobile version of a site’s content for indexing and ranking. Historically, the desktop version of a page was used for indexing, but with the increasing dominance of mobile web browsing, search engines have shifted their approach.

In simple terms, this means that search engines look at websites the way they would be displayed on a mobile device, rather than a desktop computer. So, if your website has a mobile and a desktop version, the search engine will primarily use the mobile version for determining how it should appear in search results.

This approach reflects the reality that most people are now accessing the web via mobile devices. For website owners, this means that you need to make sure your site is mobile-friendly, offering a good user experience on smaller screens, with fast loading times and intuitive navigation. If a site doesn’t perform well on mobile, it might not rank as highly in search results, even if its desktop version is excellent.

Sample Issues to Check

- Is navigation easily accessible on mobile devices?

- Is the text size large enough to read without zooming?

- Are buttons large enough to easily click?

- Are there any elements that require the user to horizontally scroll to read?

- Is spacing between elements enough to provide a clean layout?

Site Structure and Navigation

Your website’s structure and navigation play a crucial role in helping both search engines and users navigate your site. It’s important to have clearly defined categories and subcategories that won’t leave users guessing at how to navigate deeper into your site.

Consider the example of a website dedicated to DIY home repairs, encompassing a range of topics like plumbing, flooring, windows, and painting. While it’s possible to group all articles under a generic ‘blog’ category, a more effective way to organize the content is to create distinct subcategories for each home repair topic.

For instance, a visitor interested in DIY flooring should be able to effortlessly locate a dedicated “DIY Flooring” section in the navigation menu. This section would exclusively house articles about flooring, streamlining the process to find relevant articles and eliminating the need to sift through unrelated content on other types of home repairs. In turn, this also helps search engines understand that all of the articles under “DIY Flooring” are related to one another and are focused on a particular topic.

Sample Issues to Check

- Does the website have a clear hierarchy of categories and subcategories?

- Are there any categories or subcategories that take more than 3-4 clicks to reach?

- Can the click path to reach a given category or subcategory be reduced?

- Are navigation menus easy to use?

- Do product pages, service pages, and blog posts make use of breadcrumbs?

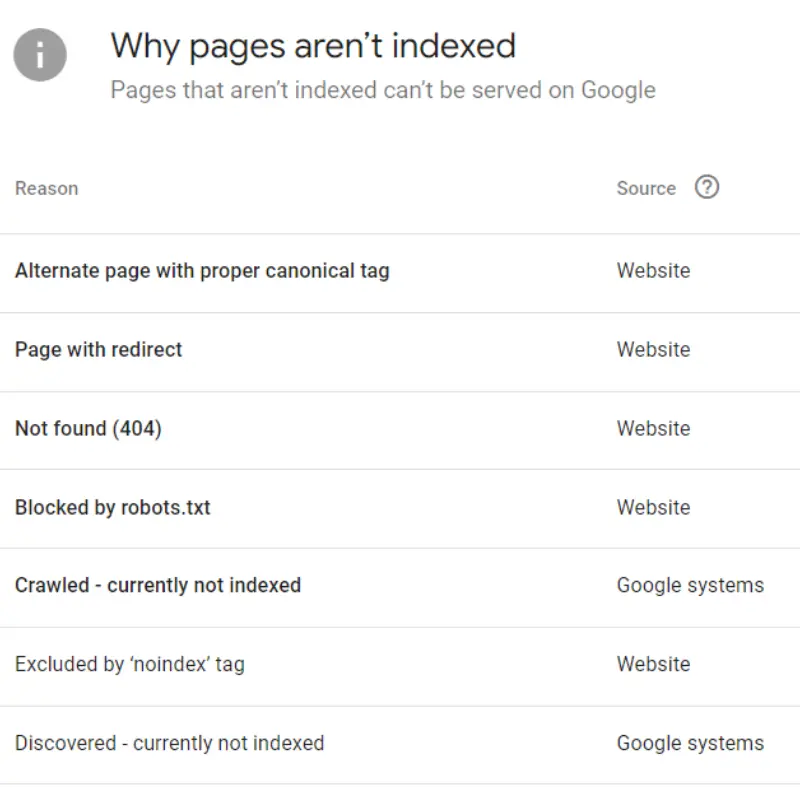

Google Search Console

Google Search Console (GSC) is a free tool provided by Google that acts as your source of truth for data related to your website. Beyond offering valuable insights into traffic, GSC provides a look into various other aspects of your site’s performance and health, such as indexing issues, crawl issues, manual actions, security issues, and user experience-related concerns. You’ll want to regularly check GSC for any issues, especially those related to crawling and indexing.

Sample Issues to Check

- Are all versions and subdomains of your website added?

- Are there any pages not indexed?

- Does the site have a manual action or security issues?

- Are there any crawl issues?

- Are there any non-secure (non-HTTPS) URLs?

Final Thoughts

Well, there you have it – the quick and dirty of why technical SEO is important for you to succeed in search and a glimpse into the audit process.

While the results of a single audit can serve you for anywhere from several months to a year, keep in mind that technical SEO is an ongoing process.

Websites get updated, links get broken, new pages are added, and old pages are deleted. This means that there’s the potential for new issues to arise, so periodic technical reviews are necessary to ensure everything is maintained.

We typically recommend running regular audits on a quarterly basis (large sites), semi-annual basis (medium sites), or annual basis (small sites).
